Introduction

For a while now, I have been fascinated by the capabilities and progress of text-to-speech (TTS) engines. My interest goes back to the early 2000s using Visual Basic, when Microsoft offered a software development kit that included both text-to-speech and speech-to-text functionalities. I think Microsoft referred to this as “Project Whisper”, though I may be confusing this name with a current OpenAI effort. It provided developers with tools for both predefined speech prompts and dictation features that competed with other voice recognition solutions of the era. The intent was to make your application more accessible.

Text-to-speech technology itself has a much longer history. One of its early milestones occurred in 1961, when engineers John Larry Kelly Jr. and Louis Gerstman generated a computer performance of Daisy Bell on the IBM 704. This pioneering demonstration paved the way for the sophisticated and natural-sounding TTS systems we encounter today.

The field of text-to-speech is vibrant and continually evolving, with different providers regularly taking the lead in terms of usability, quality, and features. In this discussion, we will explore user-friendly options currently available from platforms such as Canva, Adobe, and other third-party services. Additionally, we will delve into the possibilities of leveraging Google Cloud's API with Python to create custom TTS solutions, providing both flexibility and powerful functionality for a range of applications.

What are the Practical Uses of Generated Speech?

If you spend any time scrolling through Instagram Reels or YouTube Shorts, you’ll quickly notice that a growing number of creators use AI voices to set the scene for their videos. This trend isn’t just a matter of convenience; for many (myself included) the prospect of recording one’s own narration can be annoying. There’s something about hearing your own voice in a voiceover that exposes every little imperfection, making it hard to feel satisfied with the result. Strangely, I have no such concerns when participating in a live audio space on X (Twitter) or other recorded event where conversation is involved. It’s only during the process of crafting that perfect narration where self-criticisms seems amplified.

Given these challenges, it’s understandable why so many opt to offload narration duties. Whether you hire a professional voice artist on Fiverr or tap into the ever-expanding world of AI-generated speech, the motivation is ultimately the same: to create compelling content without the personal hang-ups that come with self-narration. Canva, in particular, has emerged as a go-to platform for social media creators. Its built-in TTS options have become a kind of stylistic signature for certain genres of short-form content. In fact, if an AI voice becomes too realistic (approaching what some call the Uncanny Valley) it can even backfire, feeling unsettling and out of place in the fast, informal world of social feeds.

Still, there are spaces where highly naturalistic TTS voices are not only appropriate but desirable. Picture the needs of instructional designers, corporate trainers, or anyone producing branded video content on a scale. For these creators, consistency and professionalism are paramount, and TTS technology is increasingly up to the task. It’s enormously practical for generating businesslike outgoing voicemail messages, onboarding materials, or product explainers, especially when you want high-quality results without the ongoing search for the perfect human voiceover artist.

The range of TTS options today means there’s a solution for almost every creative scenario. From purposefully synthetic narration for social content, to lifelike spoken audio for business, the technology is versatile enough to meet a diverse array of needs and preferences.

Commercial Options

When it comes to commercial text-to-speech solutions, the landscape is broad and varied, catering to everything from casual content creation to extensive professional use. Canva stands out for integrating a basic TTS generator directly into its platform, accessible to users working on social media projects or presentations. Though I don’t personally use Canva, my brief experience testing the platform revealed straightforward voice options: a standard male and female voice are available to all users at no extra charge, each delivering that familiar, slightly robotic tone that gets the job done. There are also premium voices available (one male, one female) which I suspect offer a more natural sound, though I haven’t had the chance to explore them.

On the Adobe side, things feel a little less developed by comparison. Despite Adobe’s prominent positioning of artificial intelligence within its Creative Cloud suite, text-to-speech and voice synthesis haven’t received quite the same attention. Adobe Audition, their audio editing flagship, includes a speech generator, but from my tests, it seems to simply draw from the operating system’s built-in voices. The result is reminiscent of the early 2000’s Microsoft SDK I mentioned earlier. It’s functional, but far from inspiring. For those using Adobe Express (Adobe’s Canva alternative), third-party plug-ins are the only option for AI voice generation. However, it’s difficult to determine if Adobe actually subsidizes some sort of “freemium” from these 3rd parties. My own attempt with Voiceover by Autopilot quickly ran into limits: after using just 59 out of 400 available characters, it became unclear whether this was a monthly cap or a one-time trial. Additional characters could be purchased (for instance, 1,000 more for $5) but the details clarifying whether this was a one-time or recurring billing were nowhere to be found.

Exploring beyond these platforms, a simple web search brings up a maze of AI speech generation services, many of which vary widely in both quality and transparency. It’s understandable that these businesses need to monetize, so the drive to have you subscribe and limitations are to be expected. What’s surprising is how some providers complicate the process with upfront surveys or unclear feature sets, often requiring users to describe their intended use before offering a sample. If I’m forced to answer a questionnaire before I can sample the goods, personally - I’ll bail.

Entry pricing across the sector typically falls in the range of $20 to $30 USD per month, though there seems to be little correlation between cost and the sophistication of the underlying technology. Some services deliver impressively modern, nuanced speech synthesis, while others feel noticeably dated, even at similar price points.

For example, Typecast.ai offers a free plan that allows prospective users to explore its features, while paid tiers unlock increased functionality. Their $9-per-month plan grants access to download history but restricts voice control, whereas the $33-per-month option promises full voice customization, though it’s unclear if this is the same level of control offered on the demo page which is four emotions and some pacing.

Voice cloning is another emerging feature, enabling users to replicate their own voice for automated narration. While I haven’t experimented with voice cloning myself, it’s reasonable to assume that these services require a controlled script to prevent misuse, such as unauthorized recreations of well-known voices (such as the late, legendary James Earl Jones, for example) from archival recordings. And again, if you’re critical of hearing your own voice perform narration, this may not be the solution you’re looking for.

The commercial TTS market seems to be a patchwork of solutions, each with its strengths and quirks. Whether you’re seeking quick, no-frills narration or advanced, customizable voice options, it pays to approach each platform with a critical eye. You’ll need to test demo versions, read the fine print, and evaluate which tool best matches your creative objectives. This takes us to the “roll your own” approach…

Using the Cloud API

You will need to take a few preliminary steps to enable Google Cloud API usage. This assumes you already have a Google Account set up. Additionally, be aware that high (commercial level) usage can lead to billing.

Billing and Payment (Just in Case)

For the scope of a solo developer, or following along with this tutorial, it would be difficult to meet the monthly billing threshold for usage of the Google Cloud API. Google asks for payment methods (assuming you’re not already paying for another Google service on this account) to limit abuse and “fly-by” abuse/usage.

Keep in mind, that this is the same API that could be used by a full fledged application, hence the efforts by Google to ensure payment is in place in case your usage would skyrocket. On that note, keep your API key secure or delete your project/service account in the Google Cloud Console when you’re done testing things.

Google offers a billing calculator, however it has not been yet updated to reference the Chirp 3 HD voices that we’re using below. Additionally, it only illustrates per million estimates, while documentation states that you are billed at true “per character” rates. The calculator shows that at one million characters or less (subbing “Wavenet” voices due to the omission of Chirp 3) – we would pay nothing.

Voice Selection

I recommend viewing two documents on this topic. One explains the various technology class (types) of voices that Google offers. The other offers examples on the specific voices available for our Chirp 3 HD voices.

Under List Voices and Types, we can see the types of voices offered.

For this post, I opted for Chirp 3: HD Voices – as this seems to be the closest to the more natural language chat bots that you may be familiar with. If you use Neural2, you may be able to get a decent level of control using SSML (Speech Synthesis Markup Language). However, I found Neural2 to be a bit robot-like for my tastes.

I’ve added a Neural2 Python script in the code repository, as well as sample SSML. This is provided in case your personal solution could benefit more using the Neural2/SSML approach. However, I’ll be working mostly with Chirp 3: HD Voices moving forward for this post.

Chirp 3: HD Voices offers less precise control over the spoken output compared to the other options. Instead, the results rely on language model training and may introduce slight variations each time you use the API, even with the same script. This subtle randomness can be beneficial, much like when a voiceover artist records several takes of a short script to ensure at least one version sounds just right. The natural variation helps mimic that experience, making each output feel a bit more unique and lifelike.

Hey! I’m going to interrupt myself to say, if you enjoy this type of content or find it helpful (please browse my other articles as well), a one-time tip in any amount of your choosing is always appreciated.

Use Stripe’s secure payment options and see “change amount”. You can use Stripe’s Link service or Credit Card.

Setting Up Google Cloud for the First Time

If you haven’t used any Google Cloud API previously, you’ll need to set this up. I have the generalized process below. I didn’t take the time to grab screenshots of each step. Instead, I’m keeping the instructions mostly generalized. I’ve noticed that Google tends to update their interfaces quite often; not even Gemini seems to have a recent understanding of the current interfaces.

So, it’s quite possible that if you’ve stumbled on to this post later on in 2025 or beyond, the interface may have already changed once again.

Enable Google Cloud usage for your account

Sign in to https://console.cloud.google.com/ and accept terms.

Billing must be set up, even for free-tier use. If you stay within quota limits, you may not be charged.

Create a new project

All resources (APIs, credentials, billing, etc.) are scoped to a project.

Some commonly used APIs may be pre-enabled, but most (like Cloud Text-to-Speech) must be manually enabled.

Enable the desired API

For example, Cloud Text-to-Speech API must be enabled for your project.

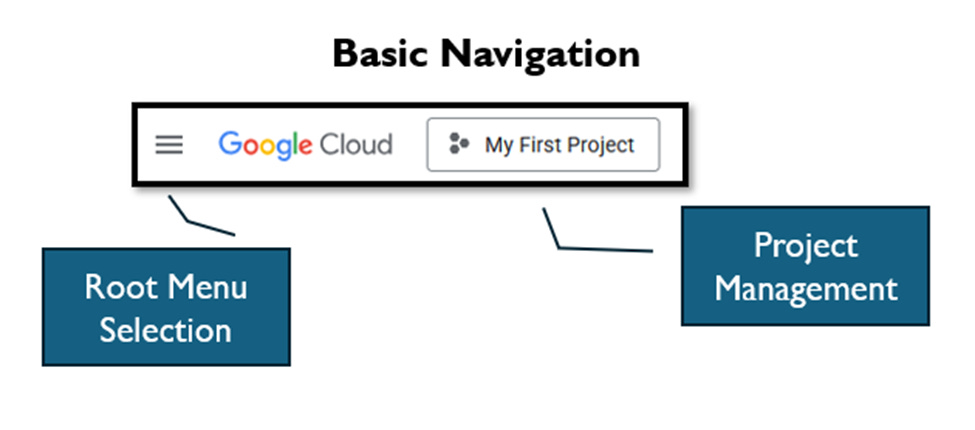

(Root Menu -> APIs and Services -> Enabled APIs & Services … then click + Enable APIs and Services in blue at the top of the screen to ensure Cloud Text-To-Speech API is enabled for this project)

Create a service account

This identity is used by your code or app to authenticate securely.

Grant this service account the necessary roles (e.g. Text-to-Speech User).

(Root Menu -> IAM & Admin -> Service Accounts, and then again note the blue +Create Service Account at the top of the screen).

Note, when creating the service account, I couldn’t seem to get clear or current documentation on the optional Permissions and Principals. When I pressed the various GPTs for any details, it seems like the common convention is to skip these specifications – which I’m personally not a fan of unless I fully understand why. This is another reason to ensure you keep your json file secure.

Generate and download a JSON key

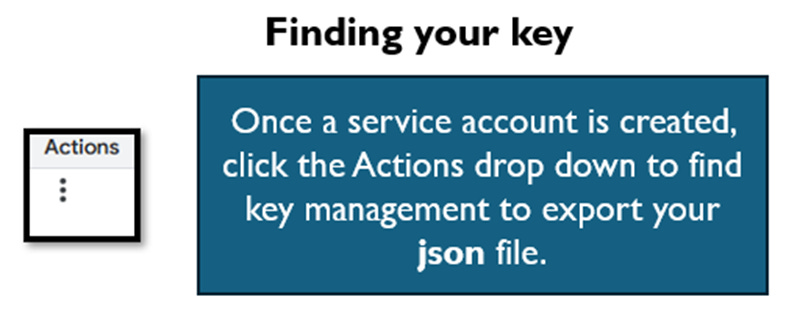

Next to your list of service accounts, note the “three dots” under Actions. This is where you can find the option to manage your key.

This file acts as your API credential, allowing your code to access the API under the context of that service account.

Once you have this initial setup done, you can use the script below. Note, in the Console, you can check under billing to set up alerts if you want to set up a budget notification, if you see yourself using the service moving forward and want to make sure that you don’t get any shocks on your credit card statement. Again, I don’t see a solo developer getting billed for light usage in this scenario – but it makes sense to be conscious of this as you have authorized Google to bill in case of usage growth. If you don’t want to have this billing open-ended, delete the service account of the project itself.

The Code

Once again, you can clone the code from our ongoing utility repository on github. Today’s code is under google-cloud-voice-api.

[Parent]

├── generate.py # Default: plain text / Chirp 3: HD voice

├── generate-ssml.py # Expects SSML and uses Neural2 voice

├── generate-test.py # Tests for initial setup

└── requirements.txt # Python dependenciesHere’s the code for the Chirp 3: HD Voices script. Note, the only thing that’s really changing here is the name of the voice passed to API. I have one script that expects SSML and one that expects a flat text file as a script. There’s nothing stopping anyone from modifying this Python to automatically figure out if the input script is one of the two different formats (text or SSML).

# generate.py

import argparse

import os

from google.cloud import texttospeech

"""

Use this to quickly test Google Cloud Text-to-Speech API with Despina HD voice using natural text from file input.

see requirements.txt for dependencies (google-cloud-texttospeech)

Browse voices here: https://cloud.google.com/text-to-speech/docs/chirp3-hd

"""

def synthesize_speech_from_text(text: str, voice_name: str, output_file: str):

"""

Synthesize speech from the input string of text.

:param text:

:param voice_name:

:param output_file:

:return:

"""

client = texttospeech.TextToSpeechClient()

synthesis_input = texttospeech.SynthesisInput(text=text)

voice = texttospeech.VoiceSelectionParams(

language_code="en-US",

name=voice_name,

ssml_gender=texttospeech.SsmlVoiceGender.FEMALE

)

audio_config = texttospeech.AudioConfig(

audio_encoding=texttospeech.AudioEncoding.MP3

)

response = client.synthesize_speech(

input=synthesis_input,

voice=voice,

audio_config=audio_config

)

with open(output_file, "wb") as out:

out.write(response.audio_content)

print(f"Audio content written to '{output_file}'")

def parse_arguments():

"""

Parse command line arguments.

:return:

"""

parser = argparse.ArgumentParser(description="Convert plain text file to speech using Google Cloud Text-to-Speech API.")

parser.add_argument("--credentials", required=True, help="Path to your Google Cloud JSON key file.")

parser.add_argument("--output", required=True, help="Output MP3 file path.")

parser.add_argument("--input", required=True, help="Path to plain text file containing input.")

parser.add_argument("--voice", default="en-US-Chirp3-HD-Despina", help="Voice name (e.g., en-US-Chirp3-HD-Despina).")

return parser.parse_args()

def main():

"""

Main function to parse arguments and call the synthesis function.

:return:

"""

args = parse_arguments()

os.environ["GOOGLE_APPLICATION_CREDENTIALS"] = args.credentials

with open(args.input, "r", encoding="utf-8") as f:

text_content = f.read()

synthesize_speech_from_text(text=text_content, voice_name=args.voice, output_file=args.output)

if __name__ == "__main__":

main()

Here is some SSML to test the Neural2 version (generate-ssml.py) if you opt to do so.

<speak>

Hello, this is a demonstration of using the Google Cloud Text to Speech engine.

<break time="500ms"/>

I'm one of the Neural2 voices, which means you can modulate my speech patterns using

<say-as interpret-as="characters">SSML</say-as>.

<break time="700ms"/>

By using <say-as interpret-as="characters">SSML</say-as>, you can format my speech patterns to your liking.

<break time="700ms"/>

<prosody pitch="+2st">

<emphasis level="moderate">For example, I've been instructed to say this line with raised pitch.</emphasis>

</prosody>

<break time="700ms"/>

<prosody rate="slow">

<emphasis level="strong">I've been instructed to say this line slowly, with emphasis.</emphasis>

</prosody>

<break time="700ms"/>

<prosody pitch="+4st" volume="loud">

<emphasis level="strong">I'm saying this line with raised pitch and volume.</emphasis>

</prosody>

<break time="700ms"/>

Perhaps you need me to read a countdown. Here, I can perform a five second countdown.

<break time="300ms"/>

<say-as interpret-as="cardinal">5</say-as>

<break time="1s"/>

<say-as interpret-as="cardinal">4</say-as>

<break time="1s"/>

<say-as interpret-as="cardinal">3</say-as>

<break time="1s"/>

<say-as interpret-as="cardinal">2</say-as>

<break time="1s"/>

<say-as interpret-as="cardinal">1</say-as>.

</speak>

The command line to use these two versions would be as follows.

For Chirp 3: HD and a flat text script:

python generate.py --credentials [path to json] --input script.txt --output chrip3-sample.mp3For the Neural 2 and SSML version:

python generate.py --credentials [path to json] --input sample.ssml --output neural2-ssml.mp3The Result

As describe earlier, I ran the script twice through the API twice to get a result that sounded a little bit better in my subjective opinion. Throughout this effort, I haven’t nailed down a consistent/proper way to get it to pronounce numbers (whether it’s a confirmation number or a phone number). Note how the phone number is pronounced in this example. Perhaps the answer is to use commas rather than hyphens (not tested).

Here’s a video showing the result as compared to the script.

As you’ll note when hearing this, it’s not perfect. However, for times when you need a quick voiceover, I believe this would fit the needs of many.

Conclusion

Text-to-speech technology has advanced significantly, offering a wide range of tools for creators, educators, developers, and businesses. While many web-based platforms cater to quick, polished outputs through simple interfaces, these often come with subscription fees, limited functionality, and unclear usage caps. In contrast, using the Google Cloud Text-to-Speech API provides a more flexible and potentially more economical path. For users comfortable with light development work, it allows fine-tuned results with per-character billing and no commitment to a monthly plan.

For creators who value customization, scalability, or simply want to avoid the unpredictability of freemium services, the Cloud API presents a compelling alternative. Initial setup does require some configuration, but the payoff is clear. You gain control over the output, access to advanced voices like Chirp 3 HD, and pricing that often stays below what commercial platforms charge for similar usage. As TTS technology continues to improve, building your own workflow may not only lead to better results but also keep your costs low.